Since the 1987 mandate in Texas to identify and serve gifted students at all grade levels, school districts have implemented a variety of practices to address the identification standards outlined in the Texas State Plan for the Education of Gifted/Talented Students (TEA, 2009). In brief, the Texas State Plan identifies the following 10 identification standards with which districts must comply (TEA, 2009, pp. 3–7):

- Written Board-approved identification policies are disseminated to parents (1.1C);

- Provisions in the Board-approved policies regarding transfers, furloughs, reassessments, exiting, and appeals are included (1.2C);

- Annual identification of students showing potential in each area of giftedness is conducted (1.3.1C, 1.3.2C);

- Students in grades K–12 are identified (1.4C);

- Data are collected from multiple sources for each area of giftedness (1.5.1C);

- Assessments are in a language students understand or are nonverbal (1.5.2C);

- At least three criteria are used to identify K–12 students for services in each area of giftedness offered by the school district (1.5.3C, 1.5.4C, 1.5.5C);

- Qualitative and quantitative measures need to be included within the criteria (1.5.4C);

- Access to assessment is available to all students in the school district (1.6C); and

- A committee of at least three district or campus educators who have received training in the nature and needs of gifted and talented students reviews the data (1.7C).
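The countable parts of these standards lend themselves to a quick self-check. The sketch below is a minimal illustration, assuming a district records its plan in a structure like this one; the class and field names (`DistrictPlan`, `AreaPlan`, `Criterion`, `compliance_gaps`) are hypothetical, and the check covers only the numeric requirements (grades K–12, at least three criteria, both qualitative and quantitative measures, three trained committee members), not the policy, language, or access standards.

```python
from dataclasses import dataclass


# Hypothetical record types for illustration; the names are not taken from the State Plan.
@dataclass
class Criterion:
    name: str
    kind: str  # "qualitative" or "quantitative"


@dataclass
class AreaPlan:
    area: str                  # an area of giftedness the district serves
    criteria: list[Criterion]  # criteria used to identify students in that area


@dataclass
class DistrictPlan:
    grades_served: set[str]
    areas: list[AreaPlan]
    trained_committee_members: int


K12 = {"K"} | {str(grade) for grade in range(1, 13)}


def compliance_gaps(plan: DistrictPlan) -> list[str]:
    """List gaps in the countable requirements only; policy and access standards are not checked."""
    gaps = []
    if not K12 <= plan.grades_served:
        gaps.append("not every grade from K through 12 is served (1.4C)")
    for area in plan.areas:
        if len(area.criteria) < 3:
            gaps.append(f"{area.area}: fewer than three criteria")
        kinds = {c.kind for c in area.criteria}
        if not {"qualitative", "quantitative"} <= kinds:
            gaps.append(f"{area.area}: does not include both qualitative and quantitative measures (1.5.4C)")
    if plan.trained_committee_members < 3:
        gaps.append("fewer than three trained educators on the selection committee (1.7C)")
    return gaps


plan = DistrictPlan(
    grades_served=set(K12),
    areas=[
        AreaPlan("general intellectual ability", [
            Criterion("ability test", "quantitative"),
            Criterion("achievement test", "quantitative"),
            Criterion("parent checklist", "qualitative"),
        ]),
        AreaPlan("creativity", [Criterion("product portfolio", "qualitative")]),
    ],
    trained_committee_members=3,
)
print(compliance_gaps(plan))
```

Here the intellectual-ability plan passes, while the creativity plan is reported twice: it uses a single criterion, and that criterion is only qualitative.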

Although most of us are very familiar with these identification standards, I believe it is important to review them from time to time and to consider how they might be implemented in your school and how they might relate to student outcomes. Using the chart below, check yourself by classifying each example as mostly a good or mostly a poor practice to see how your thinking aligns with that of researchers in the field of gifted education regarding best practices in identifying gifted and talented students.

Answers to Mostly a Good Practice and Mostly a Poor Practice

- Poor

The assessments used in the identification process need to be aligned to the talent domain and to the goals of the program (Johnsen, 2012). Within the context of schooling, creativity is best examined within the academic domain itself (Wai, Lubinski, & Benbow, 2005) because it has been difficult to show that creativity tests predict substantial accomplishments (Renzulli, 2005). For example, to assess domain-based creativity, a teacher might collect products that show the many different ways a student solves a math problem or the variety of metaphors a student uses in writing an essay. If schools are interested in examining creativity beyond the academic domains, such as in the arts, then a consensual assessment approach appears to have the most research support (Amabile, 1982, 1996). In this approach, experts in a particular field such as art, music, or theater use their judgment and experience to evaluate creative products and performances. Even at the elementary level, raters show strong agreement in judging students’ performances (Baum, Owen, & Oreck, 1996).

- Poor

In this example, the teacher is the sole source of referral for additional testing and acts as a gatekeeper for the identification process. Research studies indicate that teachers’ perceptions may be influenced by preconceived notions of giftedness, such as gender stereotypes (Siegle & Powell, 2004), academic achievement (Hunsaker, Finley, & Frank, 1997), socioeconomic background (Hunsaker et al., 1997), and verbal ability and social skills (Speirs Neumeister, Adams, Pierce, Cassady, & Dixon, 2007). Although dynamic assessments (e.g., testing, teaching, and retesting) and curriculum-based assessments (e.g., observations of problem solving or curricular tasks) can help identify students from traditionally underrepresented groups (Borland, 2014), teachers still need professional development to understand all aspects of giftedness (Briggs, Reis, & Sullivan, 2008).

- Poor

In this example, similar to the teacher nomination above, a single source is used. In this case, however, the gatekeeper is a quantitative measure rather than a qualitative assessment. Nonverbal tests have been viewed as reducing the linguistic, cultural, or economic obstacles that keep underrepresented groups from accessing gifted and talented services (Naglieri & Ford, 2003). On the other hand, some researchers have challenged this assumption, suggesting that nonverbal tests do not predict performance in academic domains (Lohman, 2005b). Data on the predictive validity of various nonverbal assessments are mixed; the Test of Nonverbal Intelligence (TONI), for example, predicted Hispanic children’s cognitive development (Gonzalez, 1994) and also predicted achievement test scores among deaf and hard of hearing children (Mackinson, Leigh, Blennerhassett, & Anthony, 1997). Although both camps would support the use of nonverbal tests with English language learners, any test needs to be supplemented with other data and matched to student characteristics (Worrell & Erwin, 2011). In this way, students are able to demonstrate talents in areas that a single measure does not assess. Moreover, when the majority of students belong to populations that are traditionally underrepresented in gifted programs, the school district may need to consider developing local norms. National norms are built on the assumption that all students are afforded similar educational opportunities (Lohman & Lakin, 2008). Because this is not the case, local norms can account for differences in socioeconomic status, race/ethnicity, and parental education (Worrell & Erwin, 2011).

- Good

This example uses multiple sources (e.g., teachers, parents, and students) to nominate students for the gifted program. Just as teachers need professional development to understand all aspects of giftedness, parents also need to understand the characteristics of gifted and talented students and receive nomination forms that list observable behaviors (Worrell & Erwin, 2011). Parents provide important information about behaviors that might not be observed at school, such as interests and academic work completed at home (Lee & Olszewski-Kubilius, 2006). When trained, teachers are reliable sources of identification information and are best at providing information about psychosocial aspects of high functioning such as student motivation, self-regulation, and task commitment (Borland, 2014). Although some researchers have supported the reliability of peer nominations (Cunningham, Callahan, Plucker, Roberson, & Rapkin, 1998; Johnsen, 2011), others suggest that peers may be influenced by popularity (Blei & Pfeiffer, 2007). Therefore, everyone who provides nomination information should receive training to increase their understanding of gifts and talents and should use checklists of observable behaviors in different domains to increase reliability across observers.

- Poor

In this example, similar to the teacher nomination and the nonverbal assessment above, a single source is used. Moreover, the STAAR test is not intended to identify students for gifted and talented programs and will most likely have a ceiling effect for advanced students. In other words, a grade-level test does not have enough difficult items to measure above-level performance (Swiatek, 2007). Another weakness is that students whose language and academic skills differ from those assessed on the state’s high-stakes test may be regarded as academically deficient and unsuited to high levels of academic challenge (Gallagher, 2004). While achievement tests are certainly good sources of information, they assess what a student has already acquired in or outside of school. Schools need to consider whether the goal of the program is to serve students who are already clearly more advanced than their peers or those who have the potential to become so (Lohman, 2005a). Remember that the state’s definition clearly states, “gifted and talented student means a child or youth who performs at or shows the potential for performing at a remarkably high level of accomplishment” (Texas Education Code §29.121, Definition; TEA, 1997, p. 18).

- Poor

Setting a rigid cutoff at the top 2% or at a standard score of 130 does not take the standard error of measurement (SEM) into account. Because all tests contain some error, a single test score should be viewed as an estimate of a student’s actual performance. For example, suppose that Kori scored 125 on an intelligence test with an SEM of 5 points, missing the school district’s cutoff by 5 points. One would expect her true score to fall within the range of 120–130 (adding and subtracting one SEM from 125) about 68% of the time; within 115–135 (adding and subtracting two SEMs) about 95% of the time; and within 110–140 (adding and subtracting three SEMs) more than 99% of the time. In other words, if Kori were to take the test again, she might conceivably score anywhere from the above average (e.g., 110) to the very superior (e.g., 140) range (Johnsen, 2011). Given the SEM and the errors associated with any test’s ability to predict long-term performance, a school district’s goal should be to set cutoffs that serve as many students as possible, not to exclude students whose potential needs to be recognized and developed.

- Good

This example uses multiple sources (student, parent, teacher) and varied formats (portfolios, checklists, tests), which can identify individual student strengths within a specific domain. Portfolios are able to showcase skills and are predictive of future performance (Johnsen & Ryser, 1997; VanTassel-Baska, 2008). Educators should be careful, however, that the items within portfolios represent not only classroom projects but also students’ interests and talents within and outside of the school setting (Briggs et al., 2008).

- Poor

According to the Office for Civil Rights, setting different criteria for different schools raises concerns about equal access (Trice & Shannon, 2002). All aspects of the identification process should be applied in a nondiscriminatory manner. In addition, researchers suggest that differences among ethnic/racial groups on intelligence tests are manifestations of an achievement gap rather than of test bias (Frisby & Braden, 1999; Reynolds & Carson, 2005; Worrell, 2005). To increase the inclusion of underrepresented populations, these practices are recommended:

- Training teachers in multicultural awareness so that they recognize diverse talents (Briggs & Reis, 2004; Ford, Moore, & Milner, 2005),

- Implementing talent development opportunities prior to identification (e.g., front-loading; Briggs et al., 2008),

- Assessing pre-skills—those that would lead to advanced skills within a domain (Worrell & Erwin, 2011),

- Using performance and alternative assessments such as observations of students during enriched lessons and student work portfolios (Briggs et al., 2008),

- Emphasizing informal assessments over formal assessments (Briggs et al., 2008), and

- Using multiple indicators of gifted behaviors (Frasier & Passow, 1994).
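Local norms, raised earlier in the nonverbal-test example, can be computed once for the whole district and then applied the same way in every school, which avoids the school-by-school criteria questioned above. The sketch below is a minimal illustration under stated assumptions: the ten scores, the 80th-percentile threshold, and the function name are hypothetical; a real local norm would rest on a much larger group of students and is best built with guidance from someone trained in measurement; and a local-norm flag is only one of the multiple criteria a committee reviews.

```python
from bisect import bisect_left, bisect_right


def local_percentile_ranks(scores: list[float]) -> list[float]:
    """Percentile rank of each score within the district's own score distribution.

    Uses the midpoint definition: percent of scores below plus half the percent tied.
    """
    ordered = sorted(scores)
    n = len(ordered)
    ranks = []
    for s in scores:
        below = bisect_left(ordered, s)
        tied = bisect_right(ordered, s) - below
        ranks.append(100.0 * (below + 0.5 * tied) / n)
    return ranks


# Hypothetical standard scores for ten students; real local norms need far larger groups.
district_scores = [98, 104, 110, 112, 115, 118, 120, 121, 123, 126]
NATIONAL_CUTOFF = 130          # a fixed national-norm cutoff like the one discussed in this article
LOCAL_PERCENTILE_FLOOR = 80.0  # hypothetical district-wide threshold, for illustration only

ranks = local_percentile_ranks(district_scores)
nationally_flagged = [s for s in district_scores if s >= NATIONAL_CUTOFF]
locally_flagged = [s for s, r in zip(district_scores, ranks) if r >= LOCAL_PERCENTILE_FLOOR]
print(nationally_flagged)  # []
print(locally_flagged)     # [123, 126]
```

No student reaches the national cutoff, but the two highest scorers stand out against their local peers and would be referred for review alongside the other criteria.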

- Poor

The assessments need to be aligned to the program and to each student’s characteristics. For example, ability might be measured differently for students who are non-English speakers than for students who are fluent in English. Multidimensional assessments need to address both appropriately diversified services and the diversity of students (Robinson, Shore, & Enersen, 2007).

- Good

In this example, the Board has outlined its policies, and the parents are following them (TEA, 2009).

- Poor

This approach has a number of problems. First, combining scores does not reveal the strengths of individual students. Examining each criterion separately allows the committee to look for the student’s best performance and provides information for programming (Johnsen, 2011). Second, as mentioned previously, rigid cutoff numbers do not consider measurement error, and neither do ratings from 1 to 5. Is there really a true difference between a student who scored at the 94th percentile and one who scored at the 95th percentile? Third, the approach is statistically unsound. Standard or index scores can be combined arithmetically (e.g., added together), but ratings cannot (see Johnsen, 2011). Case study approaches are much better at identifying students’ strengths and needs.

- Poor

Intelligence tests are a good predictor of school performance but are only one source of information. Multiple sources and criteria should be used in making decisions about a student’s gifts and talents. Intelligence tests are also not sufficient for predicting outstanding achievement or eminence and should be used along with domain-specific assessments (Terman, 1925; Worrell & Erwin, 2011).

- Poor

This example is primarily a program services problem. The program needs to provide a continuum of learning service options (TEA, 2009). These options need to be comprehensive and cohesive so that students’ talents can be developed from kindergarten through grade 12. If such a continuum is in place, there is no need to reevaluate students as they transition from elementary to middle school.

- Good

Assuming that the committee members have received training in gifted education (TEA, 2009), this example is an excellent practice because it involves educators with different perspectives who review all of the assessment information. Including special educators also helps the committee identify twice-exceptional students, that is, students with both gifts and disabilities. Twice-exceptional students may be overlooked because their disabilities may mask their gifts, and vice versa (Pereles, Baldwin, & Omdal, 2011).

- Good

The school district in this example has aligned its assessments to its services and to its students. It understands that students have different strengths that need to be recognized and developed through appropriate services.
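Several answers above argue for examining each criterion separately instead of summing a matrix. One way to do that, sketched here under stated assumptions, is to put each standard score on a common z scale using its own mean and standard deviation, report the strongest area, and carry the 1–5 ratings along unchanged. The measures, scores, and the `student_profile` helper are hypothetical; mean 100/SD 15 and the T-score convention of mean 50/SD 10 are common scales, but each instrument’s manual gives its own. A common scale does not remove measurement error, so the committee still weighs the profile as part of a case study.

```python
from dataclasses import dataclass


@dataclass
class StandardScore:
    measure: str
    score: float
    mean: float  # the scale's mean, from the test manual
    sd: float    # the scale's standard deviation, from the test manual

    def z(self) -> float:
        """Distance from the mean in standard deviation units."""
        return (self.score - self.mean) / self.sd


def student_profile(scores: list[StandardScore], ratings: dict[str, int]) -> dict:
    """Keep every criterion visible instead of collapsing them into one matrix total."""
    z_scores = {s.measure: round(s.z(), 2) for s in scores}
    strongest = max(z_scores, key=z_scores.get)
    # 1-5 ratings are ordinal: reported as-is, never added to or averaged with test scores.
    return {"z_scores": z_scores, "strongest_area": strongest, "ratings": dict(ratings)}


# Hypothetical student data for illustration.
profile = student_profile(
    [
        StandardScore("ability test", 124, mean=100, sd=15),
        StandardScore("math achievement", 70, mean=50, sd=10),  # T-score scale
        StandardScore("reading achievement", 110, mean=100, sd=15),
    ],
    ratings={"teacher checklist: task commitment": 4, "parent checklist: interests": 5},
)
print(profile["z_scores"])        # {'ability test': 1.6, 'math achievement': 2.0, 'reading achievement': 0.67}
print(profile["strongest_area"])  # math achievement
```

A summed matrix would hide that this student’s standout area is mathematics; the profile keeps that information in front of the committee for programming decisions.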

In reviewing all of the examples, these best practices emerged as those most often supported by researchers and the Texas State Plan (TEA, 2009):

- policies should provide a framework for identification procedures and due process;

- all involved in the identification process should receive professional development, which should include multicultural awareness;

- identification procedures are conducted consistently and reliably across the school district so that all students have equal access to services;

- assessments need to be specific to each student and matched to program services;

- multiple sources are important in providing a comprehensive picture of the student’s gifted behaviors;

- along with standardized tests, multiple forms of assessments should be used (e.g., portfolios, dynamic, performance, curriculum-based, checklists);

- technically sound instruments should have sufficient ceiling to assess the advanced knowledge and skills of gifted students;

- the interpretation of assessments should be conducted by individuals knowledgeable about gifted education and tests and measurement (e.g., reliability and validity);

- the selection committee needs to be composed of educators across specialties to ensure the identification of twice-exceptional students;

- special attention needs to be paid to underrepresented populations; and

- cutoff scores should take the standard error of measurement into account and should not be applied rigidly, given various contextual factors; case studies are best.
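The Kori example and the cutoff guideline above can be worked through numerically. This sketch assumes normally distributed measurement error (the usual textbook simplification behind the 68% and 95% figures) and computes the bands at one, two, and three SEMs. The `within_sem_of_cutoff` function is a hypothetical referral rule, not a Texas or NAGC requirement: it simply flags near-miss scores so the committee reviews them in a case study instead of rejecting them outright.

```python
from statistics import NormalDist


def score_band(observed: float, sem: float, n_sems: int) -> tuple[float, float]:
    """Observed score plus or minus n standard errors of measurement."""
    return observed - n_sems * sem, observed + n_sems * sem


def within_sem_of_cutoff(observed: float, sem: float, cutoff: float, n_sems: int = 2) -> bool:
    """Hypothetical rule: True when a score misses the cutoff but the cutoff lies inside its band."""
    _, high = score_band(observed, sem, n_sems)
    return observed < cutoff <= high


# Kori's example: observed score 125, SEM 5, district cutoff 130.
for k in (1, 2, 3):
    low, high = score_band(125, 5, k)
    coverage = NormalDist().cdf(k) - NormalDist().cdf(-k)  # assumes normally distributed error
    print(f"+/-{k} SEM: {low}-{high} (about {coverage:.1%})")
# +/-1 SEM: 120-130 (about 68.3%)
# +/-2 SEM: 115-135 (about 95.4%)
# +/-3 SEM: 110-140 (about 99.7%)

print(within_sem_of_cutoff(125, 5, cutoff=130))  # True: Kori goes to the committee
print(within_sem_of_cutoff(100, 5, cutoff=130))  # False: cutoff is outside the band
```

Using two SEMs as the default is a judgment call; a district adopting a rule like this would set the width deliberately and apply it the same way in every school.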

Learner Outcomes

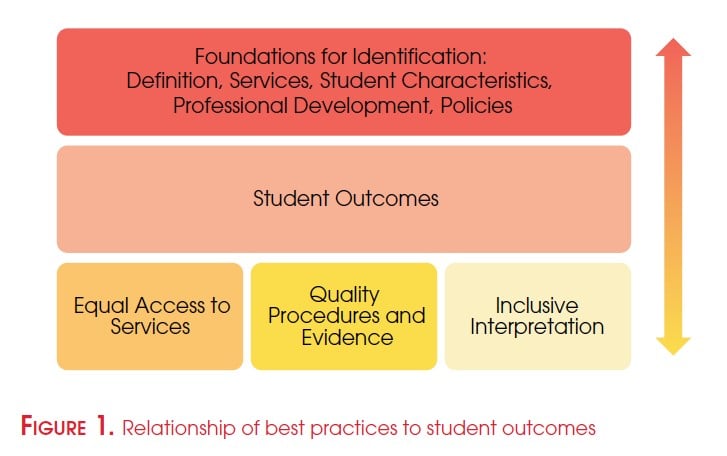

Implementing these best practices should lead to the desired student outcome of identifying all students who need gifted education services. Indicators of this outcome might include whether equal percentages of students are being nominated, identified, and selected across schools; how well identified students succeed and are retained in the program; and/or how well the program reflects the school district’s demographics (see NAGC, 2010, and TEA, 2009). The school’s annual evaluation (see 5.3C in TEA, 2009) might examine how the foundations for identification influence practices and how both of these influence student outcomes (see Figure 1).
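Two of the indicators named above, identification rates across schools and how well the identified group mirrors district demographics, reduce to simple arithmetic that an annual evaluation could track. In the sketch below, the school names and counts are invented for illustration, and the representation ratio (a group’s share of identified students divided by its share of enrollment) is one common descriptive statistic, not a measure prescribed by NAGC (2010) or TEA (2009).

```python
def identification_rates(counts: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Percent of enrolled students identified, per school: {school: (identified, enrolled)}."""
    return {name: 100.0 * ident / enrolled for name, (ident, enrolled) in counts.items()}


def representation_ratios(groups: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Each group's share of identified students divided by its share of enrollment.

    1.0 means the program mirrors district enrollment for that group; values well below
    1.0 suggest the group is underrepresented among identified students.
    """
    total_identified = sum(ident for ident, _ in groups.values())
    total_enrolled = sum(enrolled for _, enrolled in groups.values())
    return {
        name: round((ident / total_identified) / (enrolled / total_enrolled), 2)
        for name, (ident, enrolled) in groups.items()
    }


# Hypothetical counts, not drawn from any real district.
school_rates = identification_rates({"Elm Elementary": (30, 500), "Oak Elementary": (10, 500)})
group_ratios = representation_ratios({"low income": (12, 400), "not low income": (48, 600)})
print(school_rates)  # {'Elm Elementary': 6.0, 'Oak Elementary': 2.0}
print(group_ratios)  # {'low income': 0.5, 'not low income': 1.33}
```

In this invented district, one school identifies three times the share of students that the other does, and students from low-income families are identified at half their share of enrollment; either pattern would prompt a closer look at the foundations and practices described here.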

Foundations such as the school district’s definition of gifted and talented, its array of services in the core areas, student characteristics within the district, and board policies will influence both practices and outcomes. For example, an emphasis on “potential” as opposed to “achievement” within the definition may influence the types of assessments that are used to identify students. Teachers may use more talent development activities in their observations, assess pre-skills within a domain, and collect portfolios over a semester to determine each student’s potential for advanced programming. This focus on potential, in turn, might lead to the student outcome of an increased percentage of identified students from low-income families. Similarly, practices related to a more inclusive interpretation of assessment information, such as considering the standard error of measurement when making decisions and using case studies rather than matrices, will influence both the professional development of educators on the selection committee and school district policies, which are themselves foundations for identification. In summary, foundations for identification and best practices work together in influencing each school’s desired student outcomes.

Summary

The Texas State Plan for the Education of Gifted/Talented Students (TEA, 2009) identifies 10 identification standards with which school districts must comply. Although school districts may choose how they implement these standards, researchers have identified best practices for the identification of gifted and talented students. These practices relate to policies, equal access to services, alignment of assessments to each student and to program services, underrepresented populations, quality sources and types of assessments, inclusive interpretations of assessments, and professional development of educators involved in the identification process. School districts need to evaluate the foundations for their identification procedures and practices annually to ensure that desired student outcomes are achieved. When educators work together and embrace best practices, students who need services in gifted education will be identified.

References

Amabile, T. M. (1982). Social psychology of creativity: A consensual assessment technique. Journal of Personality and Social Psychology, 43, 997–1013.

Amabile, T. M. (1996). Creativity in context. Boulder, CO: Westview Press.

Baum, S., Owen, S. V., & Oreck, B. A. (1996). Talent beyond words: Identification of potential talent in dance and music in elementary students. Gifted Child Quarterly, 40, 93–101.

Blei, S., & Pfeiffer, S. I. (2007). Peer ratings of giftedness: What the research suggests? Unpublished monograph, Gifted Research Center, Florida State University, Tallahassee, FL.

Borland, J. H. (2014). Identification of gifted students. In C. M. Callahan & J. A. Plucker (Eds.), Critical issues and practices in gifted education: What the research says (2nd ed., pp. 323–342). Waco, TX: Prufrock Press.

Briggs, C. J., & Reis, S. M. (2004). Case studies of exemplary gifted programs. In C. A. Tomlinson, D. Y. Ford, S. M. Reis, C. J. Briggs, & C. A. Strickland (Eds.), In search of the dream: Designing schools and classrooms that work for high potential students from diverse cultural backgrounds (pp. 5–32). Washington, DC: National Association for Gifted Children.

Briggs, C. J., Reis, S. M., & Sullivan, E. E. (2008). A national view of promising programs and practices for culturally, linguistically, and ethnically diverse gifted and talented students. Gifted Child Quarterly, 52, 131–145.

Cunningham, C. M., Callahan, C. M., Plucker, J. A., Roberson, S. C., & Rapkin, A. (1998). Identifying Hispanic students of outstanding talent: Psychometric integrity of a peer nomination form. Exceptional Children, 64, 197–209.

Ford, D. Y., Moore, J. L., III, & Milner, H. R. (2005). Beyond colorblindness: A model of culture with implications for gifted education. Roeper Review, 27, 97–103.

Frasier, M. M., & Passow, A. H. (1994). Toward a new paradigm for identifying talent potential (RM9412). Storrs: University of Connecticut, The National Research Center on the Gifted and Talented.

Frisby, C. L., & Braden, J. P. (Eds.). (1999). Bias in mental testing [Special issue]. School Psychology Quarterly, 14(4).

Gallagher, J. J. (2004). No Child Left Behind and gifted education. Roeper Review, 26, 121–123.

Gonzalez, V. (1994). A model of cognitive, cultural, and linguistic variables affecting bilingual Hispanic children’s development of concepts and language. Hispanic Journal of Behavioral Sciences, 16, 396–421.

Hunsaker, S. L., Finley, V. S., & Frank, E. L. (1997). An analysis of teacher nominations and student performance in gifted programs. Gifted Child Quarterly, 41, 19–24.

Johnsen, S. K. (Ed.). (2011). Identifying gifted students: A practical guide (2nd ed.). Waco, TX: Prufrock Press.

Johnsen, S. K. (2012). School-related issues: Identifying students with gifts and talents. In T. L. Cross & J. R. Cross (Eds.), The handbook for school counselors serving gifted students: Development, relationships, school issues, and counseling needs/interventions (pp. 463–475). Waco, TX: Prufrock Press.

Johnsen, S. K., & Ryser, G. R. (1997). The validity of portfolios in predicting performance in a gifted program. Journal for the Education of the Gifted, 20, 253–267.

Lee, S.-Y., & Olszewski-Kubilius, P. (2006). Comparison between talent search students qualifying via scores on standardized tests and via parent nomination. Roeper Review, 28, 157–166.

Lohman, D. F. (2005a). An aptitude perspective on talent: Implications for identification of academically gifted minority students. Journal for the Education of the Gifted, 28, 333–360.

Lohman, D. F. (2005b). The role of nonverbal ability tests in the identification of academically gifted students: An aptitude perspective. Gifted Child Quarterly, 49, 111–138.

Lohman, D. F., & Lakin, J. (2008). Nonverbal test scores as one component of an identification system: Integrating ability, achievement, and teacher ratings. In J. L. VanTassel-Baska (Ed.), Alternative assessments with gifted and talented students (pp. 41–66). Waco, TX: Prufrock Press.

Mackinson, J. A., Leigh, I. W., Blennerhassett, L., & Anthony, S. (1997). Validity of the TONI-2 with deaf and hard of hearing children. American Annals of the Deaf, 142, 294–299.

Naglieri, J. A., & Ford, D. Y. (2003). Addressing underrepresentation of gifted minority children using the Naglieri Nonverbal Ability Test (NNAT). Gifted Child Quarterly, 47, 155–160.

National Association for Gifted Children. (2010). NAGC Pre-K–grade 12 gifted programming standards: A blueprint for quality gifted education programs. Washington, DC: Author.

Pereles, D., Baldwin, L., & Omdal, S. (2011). Addressing the needs of students who are twice-exceptional. In M. R. Coleman & S. K. Johnsen (Eds.), RtI for gifted students: A CEC-TAG educational resource (pp. 63–86). Waco, TX: Prufrock Press.

Renzulli, J. S. (2005). The Three-Ring Conception of Giftedness: A developmental model for promoting creative productivity. In R. J. Sternberg & J. E. Davidson (Eds.), Conceptions of giftedness (2nd ed., pp. 246–279). New York, NY: Cambridge University Press.

Reynolds, C. R., & Carson, D. (2005). Methods for assessing cultural bias in tests. In C. L. Frisby & C. R. Reynolds (Eds.), Comprehensive handbook of multicultural school psychology (pp. 795–823). Hoboken, NJ: Wiley.

Robinson, A., Shore, B. M., & Enersen, D. L. (2007). Best practices in gifted education. Waco, TX: Prufrock Press.

Siegle, D., & Powell, T. (2004). Exploring teacher biases when nominating students for gifted programs. Gifted Child Quarterly, 48, 21–29.

Speirs Neumeister, K. L., Adams, C. M., Pierce, R. L., Cassady, J. C., & Dixon, F. A. (2007). Fourth-grade teachers’ perceptions of giftedness: Implications for identifying and serving diverse gifted students. Journal for the Education of the Gifted, 30, 479–499.

Swiatek, M. A. (2007). The talent search model: Past, present, and future. Gifted Child Quarterly, 51, 320–329.

Terman, L. M. (1925). Genetic studies of genius: Vol. I. Mental and physical traits of a thousand gifted children. Stanford, CA: Stanford University Press.

Texas Education Agency. (2009). Texas state plan for the education of gifted/talented students. Austin, TX: Author.

Trice, B., & Shannon, B. (2002, April). Office for Civil Rights: Ensuring equal access to gifted education. Paper presented at the annual meeting of the Council for Exceptional Children, New York.

VanTassel-Baska, J. (2008). Using performance-based assessment to document authentic learning. In J. L. VanTassel-Baska (Ed.), Alternative assessments with gifted and talented students (pp. 129–146). Waco, TX: Prufrock Press.

Wai, J., Lubinski, D., & Benbow, C. P. (2005). Creativity and occupational accomplishments among intellectually precocious youths: An age 13 to age 33 longitudinal study. Journal of Educational Psychology, 97, 484–492.

Worrell, F. C. (2005). Cultural variation within American families of African descent. In C. L. Frisby & C. R. Reynolds (Eds.), The comprehensive handbook of multicultural school psychology (pp. 137–172). Hoboken, NJ: Wiley.

Worrell, F. C., & Erwin, J. O. (2011). Best practices in identifying students for gifted and talented education programs. Journal of Applied School Psychology, 27, 319–340.

Susan K. Johnsen, Ph.D., is Professor Emeritus in the Department of Educational Psychology at Baylor University in Waco, TX, where she directed the Ph.D. program and programs related to gifted and talented education. She is editor of Gifted Child Today and coauthor of Identifying Gifted Students: A Practical Guide, the Independent Study Program, RTI for Gifted Students, Using the National Gifted Education Standards for University Teacher Preparation Programs, and Using the National Gifted Education Standards for PreK–12 Professional Development, as well as more than 250 articles, monographs, technical reports, and other books related to gifted education. She has written three tests used in identifying gifted students: the Test of Mathematical Abilities for Gifted Students (TOMAGS), the Test of Nonverbal Intelligence (TONI-4), and the Screening Assessment for Gifted Elementary and Middle School Students (SAGES-2). She serves on the Board of Examiners of the National Council for Accreditation of Teacher Education and is a reviewer and auditor of programs in gifted education. She is past president of The Association for the Gifted (TAG) and of the Texas Association for the Gifted and Talented (TAGT). She has received awards for her work in the field of education, including NAGC’s President’s Award, CEC’s Leadership Award, TAG’s Leadership Award, TAGT’s President’s Award, TAGT’s Advocacy Award, and Baylor University’s Investigator Award, Teaching Award, and Contributions to the Academic Community.